Me and a couple colleagues engaging in our ceremonial preparation for running scheduled tasks. The robes chafe but not as bad as the tasks.

In many ways I like windows powershell more than bash and even powershell remoting over SSH. Please don't hate me. However, in spite of some of the clever things you can do by treating remote sessions as objects and manipulating them as such in powershell, it's all fun and games until you start getting HResults thrown in your face while trying to do something you'd think was the poster child use case for remoting, like installing windows updates on a remote machine.

In this post I'm going to discuss:

- Some common operations, that I am aware of, that can cause one to get into trouble automating remotely on windows

- Approaches for working around these issues

- Using a tool like Boxstarter on 100% windows automation or the Boxstarter cookbook in chef runs on Test-Kitchen, Chef Provisioning or Vagrant provisioning where WinRM is the transport mechanism

To be clear, at least 95% of the things you can do locally can be done remotely on windows without incident. This post gives voice to the remaining 5%.

Things that don't work

This may come as a surprise to those used to working over ssh where things pretty much behave just as they do locally, but in the world of remote shells on windows, there are a few gotchas that you should be aware of. Quickly here are the big ones:

- Working with the windows update interfaces simply doesn't work

- Accessing network resources like network shares, databases or web sites that normally leverage your current windows logon context will fail unless using the correct authentication protocol

- Installing MSIs or other installers that depend on either of the above resources (SQL Server, most .Net Framework installers) will not install successfully

- Accessing winrm client configuration information like max commands per shell and user, max memory per shell, etc. on windows OS versions below win 8/2012 results in Access Denied errors.

What does failure look like?

I can say this much. It's not pretty.

Windows update called in an installer

Let's try to install the .net framework v 4.5.2. I'm going to do this via a normal powershell remoting session on windows v 8.1, which ships with .net v 4.5.1, but if you are not on a windows box, you can certainly follow along by running this through the WinRM ruby gem or embedding it in a Chef recipe:

    # Download $url to $file, going through the default system proxy
    function Get-HttpToFile ($url, $file){
        Write-Verbose "Downloading $url to $file"
        if(Test-Path $file){Remove-Item $file -Force}
        $downloader=new-object net.webclient
        $wp=[system.net.WebProxy]::GetDefaultProxy()
        $wp.UseDefaultCredentials=$true
        $downloader.Proxy=$wp
        try {
            $downloader.DownloadFile($url, $file)
        }
        catch{
            # Dump the full exception only when running verbose
            if($VerbosePreference -eq "Continue"){
                Write-Error $($_.Exception | fl * -Force | Out-String)
            }
            throw $_
        }
    }

    Write-Host "Downloading .net 4.5.2.."
    Get-HttpToFile `
        "http://download.microsoft.com/download/B/4/1/B4119C11-0423-477B-80EE-7A474314B347/NDP452-KB2901954-Web.exe" `
        "$env:temp\net45.exe"

    Write-Host "Installing .net 4.5.2.."
    $proc = Start-Process "$env:temp\net45.exe" `
        -verb runas -argumentList "/quiet /norestart /log $env:temp\net45.log" `
        -PassThru
    # Block until the installer process exits
    while(!$proc.HasExited){ sleep -Seconds 1 }

This should fail fairly quickly. A look at the log file specified in the installer call (note that it is written in html format and given an html extension) reveals the actual error:

Final Result: Installation failed with error code: (0x00000005), "Access is denied."

If you investigate the log further you will find:

    WU Service: EnsureWUServiceIsNotDisabled succeeded
    Action: Performing Action on Exe at C:\b723fe7b9859fe238dad088d0d921179\x64-Windows8.1-KB2934520-x64.msu
    Launching CreateProcess with command line = wusa.exe "C:\b723fe7b9859fe238dad088d0d921179\x64-Windows8.1-KB2934520-x64.msu" /quiet /norestart

It's trying to download installation bits using the Windows Update service. This occurs not only in the "web installer" used here but also in the full offline installer. Note that this script should run without incident locally on the box. So quit your crying and just log on to your 200 web nodes and run this. What's the freaking problem?

So it's likely that the .net version you plan to run is already prebaked into your base images, but what this illustrates is that regardless of what you are trying to do, there is no guarantee that things are going to work or even fail in a comprehensible manner. Even if everyone knows what wusa.exe is and what an exit code of 0x5 signifies.
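Note that the script above never looks at the installer's exit code, so the failure only shows up in the log. A minimal sketch of surfacing it in the session might look like the following. The function names `Get-Win32ErrorMessage` and `Assert-InstallerSucceeded` are hypothetical helpers for illustration, not part of the original script, and treating 0 and 3010 (reboot required) as success is an assumption based on the usual windows installer conventions.

```powershell
# Hypothetical helpers (not from the original script).

# Translate a Win32 error code such as 5 into the system's message text.
function Get-Win32ErrorMessage {
    param([int]$Code)
    return (New-Object System.ComponentModel.Win32Exception -ArgumentList $Code).Message
}

# Throw unless the exit code is one of the usual installer success codes.
function Assert-InstallerSucceeded {
    param([int]$ExitCode, [string]$LogPath)
    # Assumption: 0 = success, 3010 = success but a reboot is required
    if ($ExitCode -eq 0 -or $ExitCode -eq 3010) { return }
    $message = Get-Win32ErrorMessage $ExitCode
    throw ("Installer failed with exit code 0x{0:X8} ({1}). See {2}" -f $ExitCode, $message, $LogPath)
}
```

After the wait loop you could call `Assert-InstallerSucceeded $proc.ExitCode "$env:temp\net45.log"`. Be aware that an empty `$proc.ExitCode` would be coerced to 0 and treated as success, so it is worth confirming the property is actually populated in your environment.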

No access to network resources

To quickly demonstrate this, I'll list the C:\ drive of my host computer from a local session using a Hyper-V console:

    PS C:\Windows\system32> ls \\ultrawrock\c$

        Directory: \\ultrawrock\c$

    Mode                LastWriteTime     Length Name
    ----                -------------     ------ ----
    d----         1/15/2015   9:32 AM            chef
    d----        12/12/2014  12:51 AM            dev
    d----         9/10/2014   3:44 PM            Go
    d----         11/5/2014   7:24 PM            HashiCorp
    d----        12/10/2014  12:13 AM            Intel
    d----        11/16/2014  11:10 AM            opscode
    d----         11/4/2014   5:23 AM            PerfLogs
    d-r--         1/10/2015   4:30 PM            Program Files
    d-r--         1/17/2015   1:11 PM            Program Files (x86)
    d----        12/11/2014  12:33 AM            RecoveryImage
    d----        11/16/2014  11:40 AM            Ruby21-x64
    d----        12/11/2014  10:26 PM            tools
    d-r--        12/11/2014  12:31 AM            Users
    d----        12/26/2014   5:24 PM            Windows

Now I'll run this exact same command in my remote powershell session:

    [192.168.1.14]: PS C:\Users\Matt\Documents> ls \\ultrawrock\c$
    ls : Access is denied
        + CategoryInfo          : PermissionDenied: (\\ultrawrock\c$:String) [Get-ChildItem], UnauthorizedAccessException
        + FullyQualifiedErrorId : ItemExistsUnauthorizedAccessError,Microsoft.PowerShell.Commands.GetChildItemCommand

    ls : Cannot find path '\\ultrawrock\c$' because it does not exist.
        + CategoryInfo          : ObjectNotFound: (\\ultrawrock\c$:String) [Get-ChildItem], ItemNotFoundException
        + FullyQualifiedErrorId : PathNotFound,Microsoft.PowerShell.Commands.GetChildItemCommand

Note that I am logged into both the local console and the remote session using the exact same credentials.

It should be pretty easy to see how this could happen in many remoting scenarios.

Working around these limitations

To be clear, you can install .net, install windows updates and access network shares remotely on windows. It's just kind of like Japanese Tea Ceremony meets automation but stripped of beauty and cultural profundity. You are gonna have to pump out a bunch of boilerplate code to accomplish what you need.

What?...I'm not bitter.

Solving the double hop with CredSSP

This solution is not so bad but will only work for 100% windows scenarios using powershell remoting (as far as I know). That will likely work for most people but breaks if you are managing windows infrastructure from linux (read on if you are).

You need to create your remote powershell session using CredSSP authentication:

    Enter-PSSession -ComputerName MyComputer `
        -Credential $(Get-Credential user) `
        -Authentication CredSSP

This also requires CredSSP to be enabled on both the host (client) and guest (server):

Host:

Enable-WSManCredSSP -Role Client -DelegateComputer * -Force

This states that I can delegate my credentials to any server. I could also provide an array of hosts to allow.

Guest:

Enable-WSManCredSSP -Role Server -Force
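Once everything is enabled (including the policy step described next if you are not in a domain), a quick sanity check is to repeat the earlier share listing through a CredSSP session. This is a minimal sketch only: `Test-DoubleHop` is a hypothetical helper name, and the computer and share names in the usage note are the ones used earlier in this post.

```powershell
# Hypothetical helper: returns $true if the remote machine can list $SharePath
# when our credentials are delegated via CredSSP, $false otherwise.
function Test-DoubleHop {
    param(
        [Parameter(Mandatory=$true)][string]$ComputerName,
        [Parameter(Mandatory=$true)][System.Management.Automation.PSCredential]$Credential,
        [Parameter(Mandatory=$true)][string]$SharePath
    )
    Invoke-Command -ComputerName $ComputerName -Credential $Credential `
        -Authentication CredSSP -ArgumentList $SharePath -ScriptBlock {
            param($path)
            try {
                # The second hop: the remote box reaches out to another machine
                Get-ChildItem $path -ErrorAction Stop | Out-Null
                $true
            }
            catch { $false }
        }
}
```

For example, `Test-DoubleHop -ComputerName MyComputer -Credential (Get-Credential user) -SharePath '\\ultrawrock\c$'` should come back `$true` only when delegation is working end to end. `Get-WSManCredSSP` run on each machine will also report what is currently enabled.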

If you are not in a windows domain, you must also edit the local Group Policy (gpedit from any command line) on the host and allow delegating fresh credentials:

After invoking the Group Policy Editor with gpedit.msc, navigate to Local Computer Policy/Computer Configuration/Administrative Templates/System/Credential Delegation. Then select Allow delegating fresh credentials in the right pane. In the following window, make sure this policy is enabled and specify the servers to authorize in the form of "wsman/{host or IP}". The hosts can be wildcarded using domain dot notation, so "wsman/*.myorg.com" would effectively allow any host in that domain.

In case you want to automate the clicking and pointing, see this script I wrote that does just that.

Now here is a kicker: you can't use the Enable-WSManCredSSP cmdlet in a remote session. The server needs to be enabled locally. That's OK. You could use the next workaround to get around that.

Run locally with Scheduled Tasks

This is a fairly well known and frequently used workaround for this whole dilemma. I'll be honest here, I think the fact that one has to do this to accomplish such routine things as installing updates is ludicrous and I just don't understand why Microsoft does not remove this limitation. Unfortunately there is no way for me to send a pull request for this.

As we get into this, I think you will see why I say this. It's a total hack and a general pain in the butt to implement.

A scheduled task is essentially a bit of code you can schedule to run in a separate process at a single time or on an interval. You can invoke them to run immediately or upon certain events like logon. You can provide a specific identity under which the task should run and the task will run as if that identity is logged on locally. There is a full GUI for creating and maintaining them as well as a command line interface (schtasks) and also a set of powershell cmdlets from powershell v3.0 forward.

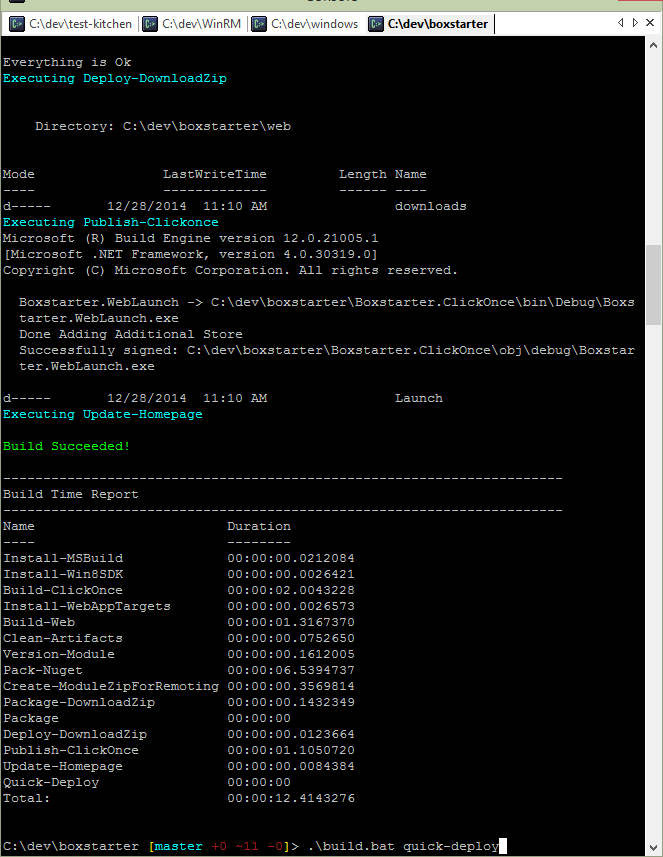

To demonstrate how to create, run and remove a task, I'll be pulling code from Boxstarter, an OSS project I started to address windows environment installs. Boxstarter uses the schtasks executable to support earlier powershell versions (pre 3.0) from before the cmdlets were created.

Creating a scheduled task

    schtasks /CREATE /TN 'Temp Boxstarter Task' /SC WEEKLY /RL HIGHEST `
        /RU "$($Credential.UserName)" /IT `
        /RP $Credential.GetNetworkCredential().Password `
        /TR "powershell -noprofile -ExecutionPolicy Bypass -File $env:temp\Task.ps1" `
        /F

    #Give task a normal priority
    $taskFile = Join-Path $env:TEMP RemotingTask.txt
    Remove-Item $taskFile -Force -ErrorAction SilentlyContinue
    [xml]$xml = schtasks /QUERY /TN 'Temp Boxstarter Task' /XML
    $xml.Task.Settings.Priority="4"
    $xml.Save($taskFile)
    schtasks /CREATE /TN 'Boxstarter Task' /RU "$($Credential.UserName)" `
        /IT /RP $Credential.GetNetworkCredential().Password `
        /XML "$taskFile" /F | Out-Null
    schtasks /DELETE /TN 'Temp Boxstarter Task' /F | Out-Null

This might look a little strange so let me explain what this does (see here for original and complete script). First it uses the CREATE command to create a task that runs under the given identity to run whatever script is in Task.ps1. One important parameter here is /RL, the Run Level. This can be set to Highest or Limited. We want to run with highest privileges. Finally, note the use of /IT - interactive. This is great for debugging. If the identity specified just so happens to be logged into an interactive session when this task runs, any GUI elements will be seen by that user.

Now for some reason the schtasks CLI does not expose the priority to run the task with. However you can serialize any task to XML and then manipulate it directly. I found that this was important for Boxstarter, which is often invoked immediately after a fresh OS install. Things like Windows Updates or SCCM installs quickly take over and Boxstarter may get significantly delayed waiting for its turn, so it at least asks to run with a normal priority.

Saving this file is not enough to change the priority. We now have to create a new task based on that XML using schtasks, supplying the credentials again, otherwise our identity is lost. Boxstarter will create this task once and then reuse it for any command it needs local rights for. It then deletes it in a finally block when it is done.
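For comparison, here is a rough sketch of the same idea using the scheduled task cmdlets mentioned earlier, where the priority can be set directly and no XML round trip is needed. This is not what Boxstarter does. It assumes the ScheduledTasks module is available (it ships with windows 8 / 2012 and later as far as I know), `New-NormalPriorityTask` is a hypothetical name, and it makes no attempt to reproduce the /IT interactive behavior.

```powershell
# Hypothetical helper: registers an on-demand task that runs $ScriptPath as
# $Credential with highest privileges and normal (4) priority.
function New-NormalPriorityTask {
    param(
        [Parameter(Mandatory=$true)][string]$TaskName,
        [Parameter(Mandatory=$true)][string]$ScriptPath,
        [Parameter(Mandatory=$true)][System.Management.Automation.PSCredential]$Credential
    )
    $action = New-ScheduledTaskAction -Execute 'powershell.exe' `
        -Argument "-NoProfile -ExecutionPolicy Bypass -File `"$ScriptPath`""
    # Priority is exposed directly here, unlike with the schtasks CLI
    $settings = New-ScheduledTaskSettingsSet -Priority 4
    # No trigger is supplied, so the task only runs when started explicitly
    Register-ScheduledTask -TaskName $TaskName -Action $action -Settings $settings `
        -User $Credential.UserName `
        -Password $Credential.GetNetworkCredential().Password `
        -RunLevel Highest -Force
}
```

The task could then be started with `Start-ScheduledTask -TaskName 'Boxstarter Task'` and removed with `Unregister-ScheduledTask -TaskName 'Boxstarter Task' -Confirm:$false`.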

Running the Scheduled Task

I'm not going to cover all of the event driven mechanics or interval syntax since I am really referring to the running of ad hoc tasks. To actually cause the above task to run is simple:

    $taskResult = schtasks /RUN /I /TN 'Boxstarter Task'
    if($LastExitCode -gt 0){
        throw "Unable to run scheduled task. Message from task was $taskResult"
    }

Since schtasks is a normal executable, we check the exit code to determine if it was successful. Note that this does not indicate if the script that the task runs is successful, it simply indicates that the task was able to be launched. For all we know the script inside the task fails horribly. The /I argument informs RUN to run immediately.

I'm going to spare all the code details for another post, but boxstarter does much more than just this when running the task. At the least you'd want to know when the task ends and have access to output and error streams of that task. Boxstarter finds the process, pumps its streams to a file and interactively reads from those streams back to the console. It also includes hang detection logic in the event that the task gets "stuck" like with a dialog box and is able to kill the task along with all child processes. You can see that code here in its Invoke-FromTask command.
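If you don't need streaming output or hang detection, a much cruder way to know when the task has ended is to have the task script drop a marker file when it finishes and poll for it from the remote session. To be clear, this is a simplified stand-in and not how Boxstarter's Invoke-FromTask works. `Wait-TaskMarker` and the marker file convention are hypothetical.

```powershell
# Hypothetical helper: blocks until $MarkerPath exists, then returns its content.
#
# Assumption: the script run by the task ends with something like
#   Set-Content "$marker.tmp" 'OK'          (or the error text on failure)
#   Move-Item "$marker.tmp" $marker -Force
# so the marker only appears once it has been completely written.
function Wait-TaskMarker {
    param(
        [Parameter(Mandatory=$true)][string]$MarkerPath,
        [int]$TimeoutSeconds = 600
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while (-not (Test-Path $MarkerPath)) {
        if ((Get-Date) -gt $deadline) {
            throw "Timed out after $TimeoutSeconds seconds waiting for $MarkerPath"
        }
        Start-Sleep -Seconds 1
    }
    # Return whatever the task wrote, without the trailing newline
    return (Get-Content $MarkerPath | Out-String).Trim()
}
```

The caller would delete any stale marker, run the task with schtasks /RUN as shown above and then call `Wait-TaskMarker "$env:temp\Task.done"`, failing if the returned text is anything other than the agreed success value.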

An example usage of that function is when Boxstarter installs .net 4.5 on a box that does not already have it:

    if(Get-IsRemote) {
        Invoke-FromTask @"
    Start-Process "$env:temp\net45.exe" -verb runas -wait -argumentList "/quiet /norestart /log $env:temp\net45.log"
    "@
    }

This will block until the task completes and ensure stdout is streamed to the console and stderr is captured and bubbled back to the caller.

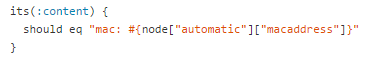
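The Get-IsRemote call in the example above is Boxstarter's own function. If you just want a rough equivalent for your own scripts, one option is to look for the `$PSSenderInfo` automatic variable, which powershell defines only inside a remoting session. `Test-IsRemoteSession` is a hypothetical name, and this is a sketch of the general technique rather than Boxstarter's implementation. As far as I know it will not detect commands run through a raw WinRM shell (such as from the WinRM ruby gem), since those are not powershell remoting sessions.

```powershell
# Hypothetical helper: $true when running inside a powershell remoting session.
function Test-IsRemoteSession {
    # Get-Variable avoids an error under strict mode when the variable is absent
    $senderInfo = Get-Variable -Name PSSenderInfo -ErrorAction SilentlyContinue
    return ($null -ne $senderInfo) -and ($null -ne $senderInfo.Value)
}
```

In a local console this returns `$false`, so a script can decide whether it needs the scheduled task detour or can simply run the command directly.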

The Boxstarter cookbook for x-plat use

I developed Boxstarter with a 100% windows world in mind. That was my world then but my world is now mixed. I wanted to leverage some of the functionality in boxstarter for my chef runs without rewriting it (yet). So I created a Chef Boxstarter Cookbook that could install the powershell modules on a converging chef node and convert any block of powershell in a recipe into a Boxstarter "package" (a chocolatey flavored package) that can run inside of a boxstarter context within a chef client run. This can be placed inside a client run launched from Test-Kitchen or Chef-Provisioning, both of which can run via WinRM on a remote node. One could also use it to provision vagrant boxes with the chef zero provisioner plugin.

Here is an example recipe usage:

    include_recipe 'boxstarter'

    # Inside a recipe, attributes are read and written through the node object
    node.default['boxstarter']['version'] = "2.4.159"

    boxstarter "boxstarter run" do
      password node['my_box_cookbook']['my_secret_password']
      disable_reboots false
      code <<-EOH
        Enable-RemoteDesktop
        Disable-InternetExplorerESC
        cinst console2
        cinst fiddler4
        cinst git-credential-winstore
        cinst poshgit
        cinst dotpeek
        Install-WindowsUpdate -acceptEula
      EOH
    end

You can learn more about boxstarter scripts at boxstarter.org. They can contain ANY powershell and have the chocolatey modules loaded, so all chocolatey commands exist. They also expose some custom boxstarter commands for customizing windows settings (note the first two commands) or installing updates. Unless asked otherwise, Boxstarter will reboot whenever it detects a pending reboot - bad for production nodes but great for a personal dev environment.

A proof of concept and a bit rough

The boxstarter cookbook is still rough around the edges. It does what I need it to do and I have not invested much time in it. The output handling over WinRM is terrible and it needs more work making sure errors are properly bubbled up.

At any rate, this post is not intended to be a plug for boxstarter but it demonstrates how to get around the potential perils one may encounter inside of a remote windows session either in powershell directly or from raw WinRM from linux.